Cryptoeconomic Coprocessors and their applications

Hyperbridge introduced a new class of coprocessors that are secured by crypto-economic security. But what do all of these words even mean??

We’ll start our journey with the constraints of blockchain scalability. Typically, blockchains are built to be single-threaded, which severely limits the throughput of computation that can happen onchain. So we need to perform these computations off-chain (somehow?) and report them back onchain with proofs. If we simply report back the data without any proofs, we no longer have a coprocessor; we’ve effectively created an oracle, which has a drastically different security model. This is an important distinction to make, because proofs are what set apart verifiable security from the centralized points of failure that we sought to escape by constructing decentralized infrastructure.

Ok, seems fair enough. What are our options for doing this? Well, for one, we can pursue the ideal gas equation coprocessor model and attempt to achieve cryptographic proofs of computation through SNARKs and related polynomial oracle protocols. Zero-knowledge tech, for all of its hype, isn’t cheap to run and is mostly infeasible for very complicated computations. (Sources [1], [2])

An alternative to zk coprocessors would be to offload the work to another blockchain, and have that blockchain leverage its finality and state proofs as evidence that the computation was done correctly, which is backed by its crypto-economic security. While this seems like it introduces added risk, the aim of this blog is to show that this is actually much cheaper & faster than zk coprocessors, with comparable levels of security.

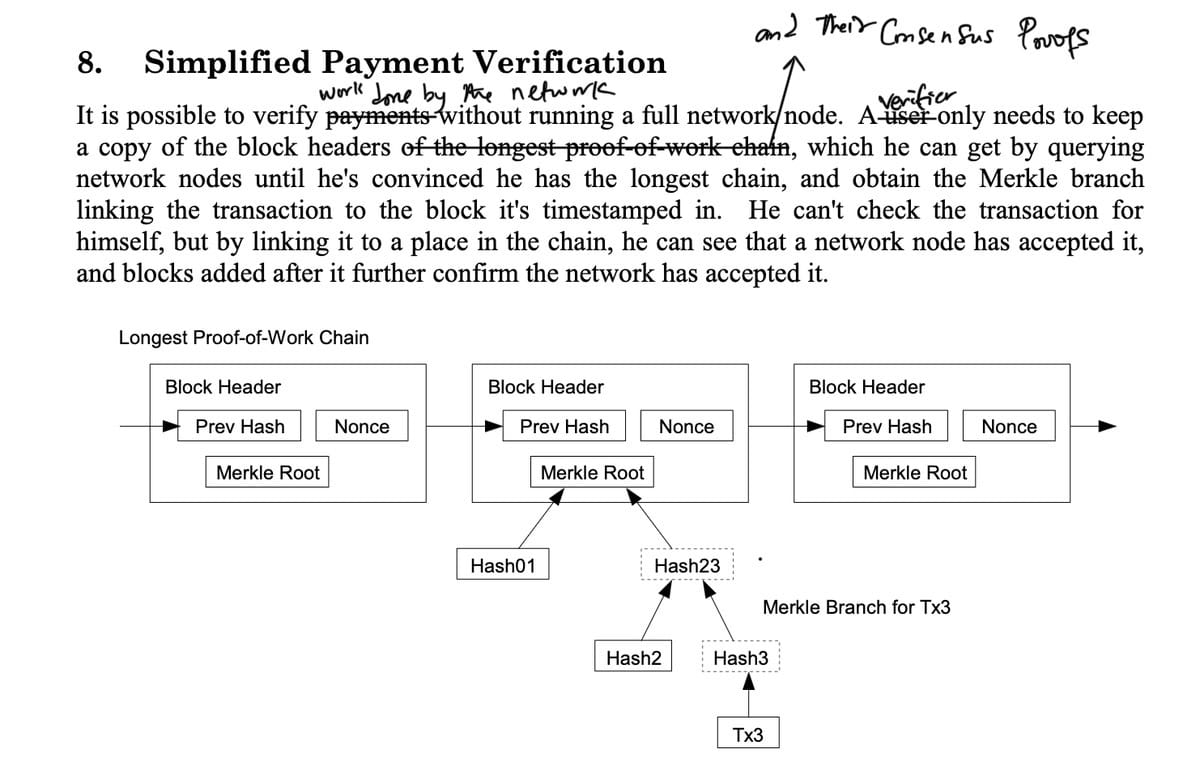

But first, how is all of this possible in the first place? This idea was already outlined in the Bitcoin white paper. Satoshi realised the need for a lighter way to verify the work done by a blockchain without requiring a full node. Without this, it would be impossible to achieve the necessary decentralisation to replace central banks of nation-states. This led to the development of the SPV protocol, which utilizes the full economic security of the blockchain. In a more generalised view, we can observe that the finality and state proofs of a blockchain are enough to convince any verifier that a claimed onchain computation, such as the transfer of bitcoins, has been performed.

Section 8. Bitcoin Whitepaper, S. Nakamoto.

If we understand that a blockchain is a deterministic state machine, which takes in inputs from user transactions and writes the output of its computations to its state (see state machine proofs), and that each new “state” is encoded in its headers, then it’s clear how state proofs (typically merkle proofs of some kind) of the outputs of specific onchain programs, in combination with the finality proofs of the blockchain’s headers, can be used as a proof of computation.
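
To make the state proof half of this concrete, here is a minimal sketch of verifying a merkle proof against a state root. It assumes a plain binary SHA-256 merkle tree over a non-empty list of leaves; real chains typically use some flavour of merkle-patricia trie with domain-separated hashing, and the names `merkle_root`, `merkle_proof` and `verify_state_proof` are illustrative, not taken from any particular client.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level):
    # Duplicate the last node when the level has an odd number of nodes.
    if len(level) % 2 == 1:
        level = level + [level[-1]]
    return level, [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves):
    """Root of a toy binary merkle tree (assumes at least one leaf)."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        _, level = _next_level(level)
    return level[0]


def merkle_proof(leaves, index):
    """Sibling hashes from leaf `index` up to the root."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        padded, parents = _next_level(level)
        sibling = index ^ 1
        # Record the sibling hash and whether it sits to the left of our node.
        proof.append((padded[sibling], sibling < index))
        level, index = parents, index // 2
    return proof


def verify_state_proof(state_root, leaf, proof):
    """Recompute the root from the leaf and its siblings, then compare."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == state_root
```

A verifier that already trusts a finalized header only needs the `state_root` inside it, the claimed output (`leaf`), and the short `proof`; it never has to re-execute the computation or hold the full state.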

In the context of a proof of stake blockchain, the consensus proofs are going to be signatures from the active validator set who are signing the latest finalized header in the chain. The header encodes the current state through the inclusion of the state root (typically a merkle root of some kind).
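
As a rough sketch of what checking such a consensus proof involves, the snippet below tests whether the validators who signed a header hold enough of the total stake. It assumes signature verification has already happened elsewhere (so `signers` is the set of validators with valid signatures) and uses the usual BFT threshold of more than two thirds of stake, which is an assumption here rather than something specific to any one chain.

```python
from typing import Dict, Set


def has_finality_quorum(stakes: Dict[str, int], signers: Set[str]) -> bool:
    """True if the signers hold strictly more than 2/3 of the total stake.

    `stakes` maps validator id -> bonded stake for the active set.
    `signers` is assumed to contain only validators whose signatures
    over the header were already verified.
    """
    total = sum(stakes.values())
    signed = sum(stake for validator, stake in stakes.items() if validator in signers)
    # Integer comparison avoids floating point rounding at the threshold.
    return 3 * signed > 2 * total
```

The cost of a consensus proof scales with the number of signatures that must be checked, which is why the size of the validator set matters so much later in this post.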

Ok, so why is it secure? The short answer is slashing. Proof of stake protocols require bonded stake from the active validators that can be taken away when they provably violate the protocol. The cardinal sin is double signing. It’s provable because double signing involves producing verifiable signatures for two competing chains. Once reported onchain, it can cause the offending validators’ stake to be slashed. This disincentivizes any byzantine behaviour. The amount at stake by the active validator set of a proof of stake blockchain is what is referred to as its crypto-economic security. It’s the amount validators must be willing to forfeit in order to produce finality proofs of invalid chains.
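
The reason double signing is attributable can be sketched in a few lines: two votes from the same validator at the same height for different blocks are, by themselves, the evidence. The `Vote` type, `find_equivocations` and `slash` below are hypothetical names for illustration, signatures are assumed to be verified already, and the slashing fraction is a made-up parameter rather than any protocol's real value.

```python
from typing import Dict, List, NamedTuple, Tuple


class Vote(NamedTuple):
    validator: str
    height: int
    block_hash: str


def find_equivocations(votes: List[Vote]) -> List[Tuple[Vote, Vote]]:
    """Return pairs of conflicting votes by the same validator at the same height."""
    seen: Dict[Tuple[str, int], Vote] = {}
    offences = []
    for vote in votes:
        key = (vote.validator, vote.height)
        earlier = seen.get(key)
        if earlier is None:
            seen[key] = vote
        elif earlier.block_hash != vote.block_hash:
            offences.append((earlier, vote))
    return offences


def slash(stakes: Dict[str, int], offences, fraction: float = 1.0) -> Dict[str, int]:
    """Return new stakes with each offending validator slashed once."""
    offenders = {first.validator for first, _ in offences}
    return {
        validator: stake - int(stake * fraction) if validator in offenders else stake
        for validator, stake in stakes.items()
    }
```

Anyone who observes both votes can submit them onchain, so the fault is provable without trusting the reporter.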

Furthermore, if the amount at stake is high enough, ideally in the billions, double signing becomes economically irrational and vanishingly unlikely. This also means there can be diminishing returns to crypto-economic security. For example, Ethereum has $60 billion of crypto-economic security but secures over $400 billion in assets. According to the pundits' logic, forfeiting over $60 billion to gain $400 billion should be a bargain. However, in practice, this rarely happens: consensus faults are easily detectable, so such attacks have a low chance of success.

The question now becomes, how can we obtain high crypto-economic security without having to bootstrap it from scratch?

Shared Cryptoeconomic Security

Now that we understand that a “crypto-economic coprocessor” is just syntactic sugar for onchain computation backed by high crypto-economic security, what are the ways one may obtain high enough crypto-economic security? Shared security models offer the most efficient distribution: they allow cheap economic security to be obtained for a general range of computation applications.

Ethereum

Even deeper in the rabbit hole, we can observe that blockchains are themselves verifiable coprocessors for the global internet. They process data that we do not trust a centralised authority to compute, and so instead we’ve all decided to leverage a decentralised network of computers to compute the trades for our monkey pictures. In order for some computation to inherit the economic security of Ethereum, this computation can only exist in the following forms:

- A smart contract

Yes indeed, smart contracts inherit the full security of Ethereum. Unfortunately, their computational bandwidth is capped at 30M gas per block and they cost too much money to run.

- An optimistic rollup

An optimistic rollup may provide high compute throughput but trades this in for high finality latency. These kinds of blockchains try to inherit the economic security of the underlying L1 by being able to provably detect when things go wrong. They’re optimistic because instead of posting proofs of their computation, they simply provide all the necessary data required to check their computation. This leads to having a 7-day period where computations can be challenged before they are accepted as final.

- A ZK coprocessor

A ZK coprocessor, whether stateless (Axiom, etc.) or stateful (zk-rollup), fully inherits Ethereum's economic security. However, it also trades off finality latency, which depends on proving time and proving costs. For most rollups, proving can take over a day. It is unlikely that zk coprocessor proving time will ever drop below Ethereum's finality time. ZK coprocessors are also bottlenecked by existing cryptographic primitives, for example hash functions like keccak, blake2F, and ripemd. These functions are expensive within a zk circuit and consume hundreds of thousands of gates, which effectively disqualifies computation that makes heavy use of such cryptography.
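
To make the optimistic rollup latency trade-off above concrete, here is a toy model of a challenge window. The `Claim` type and the `challenge` and `is_final` helpers are hypothetical; a real rollup would also verify a fraud proof before accepting a challenge, which is skipped here.

```python
from dataclasses import dataclass

# The 7-day challenge period mentioned above, in seconds.
CHALLENGE_PERIOD = 7 * 24 * 60 * 60


@dataclass
class Claim:
    state_root: str
    posted_at: int  # unix timestamp at which the claim was posted to the L1
    challenged: bool = False


def challenge(claim: Claim, now: int) -> bool:
    """Mark a claim as challenged if the window is still open.

    Assumes the fraud proof itself was already checked by the caller.
    """
    if claim.challenged or now >= claim.posted_at + CHALLENGE_PERIOD:
        return False
    claim.challenged = True
    return True


def is_final(claim: Claim, now: int) -> bool:
    """A claim is only accepted once the window closes without a challenge."""
    return not claim.challenged and now >= claim.posted_at + CHALLENGE_PERIOD
```

No matter how quickly the rollup executes, nothing downstream can treat its output as final until `CHALLENGE_PERIOD` has elapsed.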

Regardless of all of these options, Ethereum unfortunately has very expensive consensus proofs. This is because of its over eight hundred thousand validators, who all produce signatures for its finality proofs. Verifying Ethereum’s consensus is too expensive to turn it into a coprocessor, which makes it very difficult to report the work done on Ethereum to other blockchains. From a practical perspective, Ethereum itself cannot be a protocol that serves other protocols; it is in fact the protocol whose capabilities must be extended, so that it may better serve its users.

EigenLayer

EigenLayer is uniquely enabled by Ethereum’s proof of stake design. It allows for extending staked ETH with new slashing predicates for extraneous consensus systems. The term AVS itself is just a fancy name for a blockchain. Most notably, EigenLayer introduces a form of NPoS (nominated proof of stake). In order to avoid an insanely large validator set for its secured chains, it opts for a delegation system where restakers delegate to operators who run the software needed to validate the secured blockchain, backed fully by the delegators’ stake. This smaller set of operators no doubt allows for cheaper proofs of consensus.

If an operator gets slashed on this blockchain, its delegators will be slashed on Ethereum mainnet. Ethereum itself has by now overtaken Bitcoin’s economic security roughly sixfold; here’s some napkin math by Justin Drake.
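
The delegation relationship can be sketched as simple bookkeeping: an operator's backing is the sum of what was delegated to it, and a slash on the operator is passed through to those delegators. This is an illustrative model only, not EigenLayer's contract logic; `operator_stake`, `slash_operator` and the basis-point parameter are assumptions.

```python
from typing import Dict, Tuple

# (delegator, operator) -> amount delegated
Delegations = Dict[Tuple[str, str], int]


def operator_stake(delegations: Delegations, operator: str) -> int:
    """Total stake backing an operator."""
    return sum(amount for (_, op), amount in delegations.items() if op == operator)


def slash_operator(delegations: Delegations, operator: str, slash_bps: int) -> Delegations:
    """Return new delegations with every delegator of `operator` slashed pro rata.

    `slash_bps` is the slashed share in basis points (10_000 = 100%).
    """
    return {
        (delegator, op): amount - (amount * slash_bps) // 10_000 if op == operator else amount
        for (delegator, op), amount in delegations.items()
    }
```

For example, with `{("alice", "op1"): 100, ("bob", "op1"): 300}`, `op1` is backed by 400 units, and a 50% slash leaves alice with 50 and bob with 150.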

> economic security flippening
>
> ⬛⬛⬛⬛⬛⬛ 600%
>
> Ethereum
>
> - 28.3M ETH staked
> - $2,121 per ETH
>
> → $60B economic security
>
> Bitcoin
>
> - 500M TH/s hashrate
> - $20 per TH/s*
>
> → $10B economic security
>
> *$20 per TH/s should be a conservative upper bound. Bitmain sold the S21 in bulk…
>
> — Justin Ðrake 🦇🔊 (@drakefjustin) November 24, 2023
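
The napkin math is easy to reproduce. The inputs below are exactly the figures quoted in the tweet; nothing else is assumed.

```python
# Figures quoted in the tweet (November 2023).
eth_staked = 28_300_000          # ETH
eth_price_usd = 2_121            # USD per ETH
btc_hashrate_th = 500_000_000    # TH/s
usd_per_th = 20                  # USD per TH/s, stated as a conservative upper bound

eth_security_usd = eth_staked * eth_price_usd      # 60_024_300_000, i.e. ~$60B
btc_security_usd = btc_hashrate_th * usd_per_th    # 10_000_000_000, i.e. $10B
ratio = eth_security_usd / btc_security_usd        # ~6.0, the "600%" in the tweet

print(f"Ethereum: ${eth_security_usd / 1e9:.1f}B")
print(f"Bitcoin:  ${btc_security_usd / 1e9:.1f}B")
print(f"Ratio:    {ratio:.1f}x")
```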

Going by this, if EigenLayer simply consumes all of staked ETH and not more, any blockchain it validates instantly also has the highest crypto-economic security. This is an interesting dynamic whose consequences haven’t been fully understood yet. It fully decouples Ethereum as an asset from Ethereum as a technology platform. It becomes very likely that we will see more technologically advanced platforms secured by Ethereum restakers, who will earn more revenue there than from validating Ethereum alone. It’s also worth noting that there’s nothing stopping EigenLayer from accepting unstaked ETH, which further compounds the amount of crypto-economic security that its applications can access, beyond the economic security that Ethereum itself has.

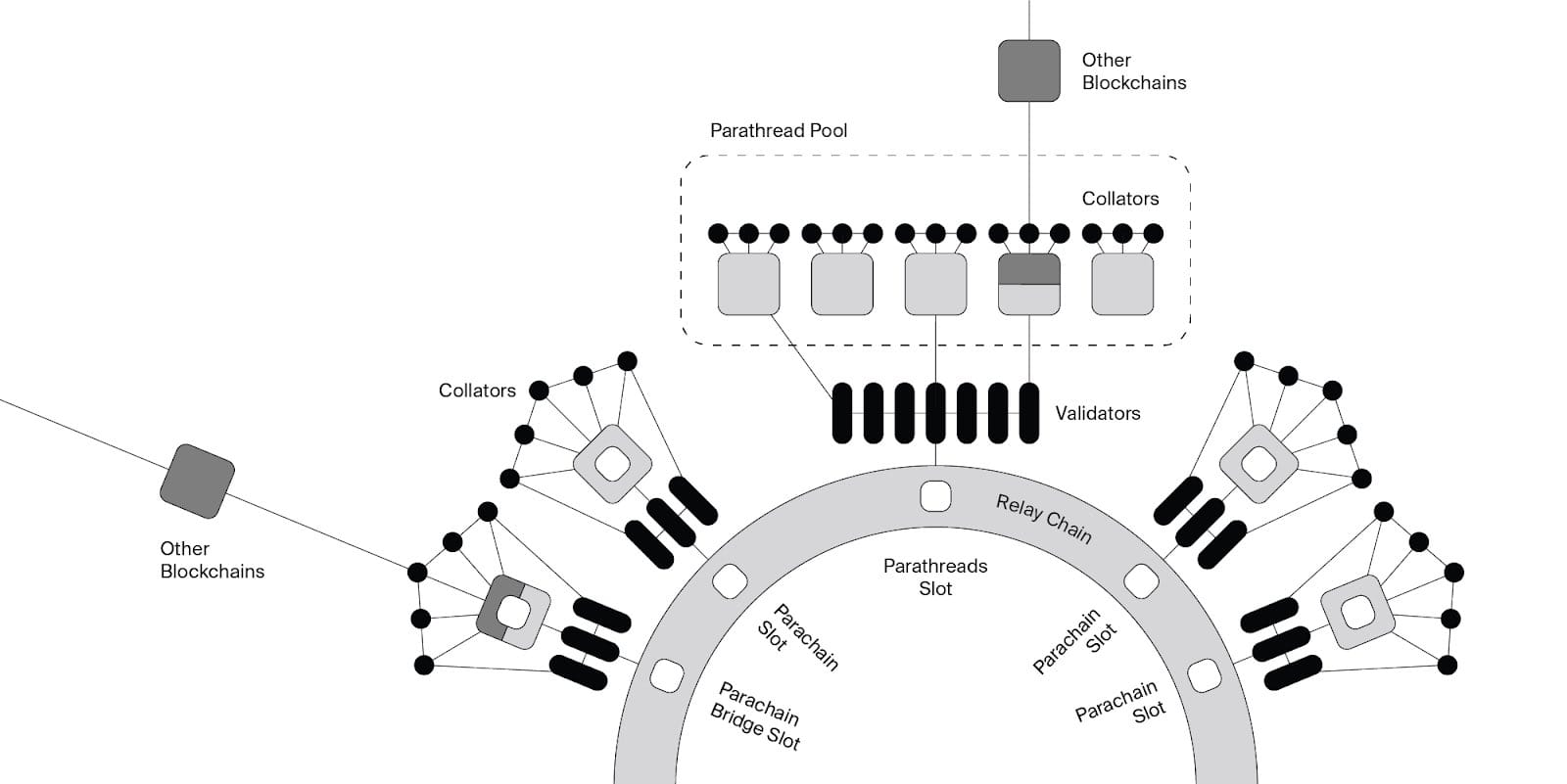

Polkadot

The industry is only just starting to catch up to the ideas that Polkadot put forth years ago, ideas like shared crypto-economic security through parachains. But how is this security enforced for parachains? What is the mechanism that puts the amount of DOT staked on Polkadot behind each parachain block?

It’s enforced using availability & backing committees (see parachains consensus). The tl;dr is that, unlike Ethereum, which does not validate its rollups’ blocks, Polkadot actually checks parachain blocks for validity, and it is currently doing so for around 50 parachains. First, the availability committee makes the parachain block data available to all validators through erasure coding. Next, Polkadot assigns a randomly selected backing committee to reconstruct the parachain block data and re-execute it. If an invalid block is finalized, then both committees are liable to be slashed, because the entire validator set can re-execute the block and have them slashed. This architecture means interoperability between parachains is fully secured by the relay chain’s economic security. Polkadot also has cheap consensus proofs through the APK proofs scheme.

Platforms like Polkadot & EigenLayer are, in my view, the only platforms that provide cheap, shared crypto-economic security for building blockchain coprocessors, although EigenLayer isn’t live yet at the time of writing.

Applications

Shared crypto-economic security increases the bandwidth of verifiable computation that can be performed onchain thereby unlocking a new class of blockchains: Crypto-economic Coprocessors. These coprocessors can also be seen as meta-protocols. Protocols that serve other protocols and not users directly. This is because they’re extending the capabilities of other protocols in a non-competitive manner.

Data availability coprocessors

We are already witnessing the emergence of data availability as a service, which also falls into the category of crypto-economic coprocessors. These coprocessors primarily offer data processing capabilities. For the uninitiated, data availability refers to the act of making certain data accessible for retrieval at a later time. This has applications in the rollup scalability paradigm: given that Ethereum has limited data bandwidth, it allows external blockchains to supplement Ethereum's data capacity.

These types of coprocessors function by continuously sending their consensus proofs to Ethereum, where they can then provide state proofs to demonstrate the availability of the data blobs on their chains. Some examples of data availability coprocessors include EigenDA, Avail, and Celestia. Because they use their consensus proofs as data availability proofs, it is evident that these coprocessors must maintain high crypto-economic security to safeguard the integrity of the rollups that depend on them.

Today we're excited to announce Blobstream X: our ZK light client based implementation of Blobstream, which streams @CelestiaOrg's high throughput, verifiable DA layer to rollups settled on Ethereum Mainnet. pic.twitter.com/4KnXTIph2O

— Succinct (@SuccinctLabs) October 20, 2023

Today, we’re excited to announce our partnership with @AvailProject in the development of Vector X: a ZK light client that secures Avail’s data attestation bridge to Ethereum. pic.twitter.com/DpRL7kV5g5

— Succinct (@SuccinctLabs) November 29, 2023

Unfortunately, Celestia & Avail both bootstrap their own crypto-economic security.

Application specific blockchains

With shared crypto-economic security, application specific blockchains become more viable. These blockchains serve specific purposes and are designed to cater to the needs of specific applications. But why would these application specific blockchains exist in the first place?

One of the main reasons is the scalability benefits that this architecture offers. Traditional blockchains are built to be single threaded, which severely limits the throughput of computation that can happen onchain. By offloading certain computations to application specific blockchains, the overall scalability of the system can be greatly improved. This allows for more efficient processing of transactions and operations within the application.

However, it's important to note that the benefits of application specific blockchains go beyond scalability. The tokenomic benefits play a crucial role as well. Firstly, tokens can be used for rewarding liveness (the ability to continuously operate without interruption) within the application. This incentivizes participants to actively engage with the platform and contribute to its growth.

In addition, transaction fees can be accrued to either token holders through token burning or to the DAO treasury. This creates a sustainable economic model where the value generated within the application can be distributed to the token holders or utilized for further development.

Moreover, application specific blockchains allow for token holder governance of the application code and treasury. This means that the token holders have the power to influence the decision-making process related to the development and management of the platform. They can propose and vote on changes, upgrades, and resource allocations, ensuring a more decentralized and community-driven approach to governance.

Interoperability Coprocessors

Conventionally, light client bridges have been built in a 1:1 fashion, in the sense that a light client only capable of verifying a single blockchain’s consensus is deployed for each pair of blockchains. This clearly doesn’t scale, as the number of light clients in the system grows quadratically, as $n^2$.

Interoperability coprocessors perform the task of aggregating the consensus and state proofs required for secure interoperability, producing a more scalable 1:N bridging infrastructure.
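
A quick count shows the difference between the two topologies. The counting convention is an assumption: in the 1:1 model every chain hosts a light client of every other chain, while in the hub model each chain hosts one light client of the hub and the hub hosts one light client per chain.

```python
def pairwise_light_clients(n: int) -> int:
    """1:1 bridging: each of n chains verifies the other n - 1 chains."""
    return n * (n - 1)


def hub_light_clients(n: int) -> int:
    """1:N bridging: n hub clients on the chains, plus n chain clients on the hub."""
    return 2 * n


for n in (5, 10, 50):
    print(n, pairwise_light_clients(n), hub_light_clients(n))
```

For 10 chains that is 90 light clients versus 20, and for 50 chains it is 2,450 versus 100.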

The notion that bridges can be secured through zk proofs of consensus is unfortunately misguided. As mentioned earlier, the security of blockchains and, by extension, bridges depends on the amount at risk of slashing in the event of consensus misbehavior. With this new perspective, we can understand the benefits of having a bridge hub with high enough crypto-economic security: it aggregates the consensus of multiple chains into its state, and it can then use its consensus proofs to stream the finalized states of its connected chains to any other blockchain.

General Computation over Onchain State

Armed with interoperability coprocessors as a verifiable source of onchain state (roots), we can now perform onchain computations over blockchain state, further transforming it into more useful pieces of data. Take, for example, computing the TWAP of a given Uniswap pair on any chain. Powered by the interoperability coprocessor, this TWAP data can then be streamed to any chain.
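
As a sketch of the kind of computation meant here, the function below computes a time-weighted average price from a series of price observations. It assumes the observations were already read from verified state (for instance via state proofs against a finalized state root) and that each price holds until the next observation; the `twap` function and its input format are illustrative, not Uniswap's interface.

```python
from typing import List, Tuple


def twap(observations: List[Tuple[int, float]], end_time: int) -> float:
    """Time-weighted average price over [first timestamp, end_time].

    `observations` is a list of (timestamp, price) sorted by timestamp.
    Each price is weighted by how long it stayed in effect.
    """
    if not observations or end_time <= observations[0][0]:
        raise ValueError("need at least one observation before end_time")
    boundaries = [t for t, _ in observations[1:]] + [end_time]
    weighted_sum = 0.0
    for (start, price), end in zip(observations, boundaries):
        weighted_sum += price * (end - start)
    return weighted_sum / (end_time - observations[0][0])


# Price is 100 for 60s, 110 for 120s, then 90 for 60s.
prices = [(0, 100.0), (60, 110.0), (180, 90.0)]
print(twap(prices, end_time=240))  # (6000 + 13200 + 5400) / 240 = 102.5
```

Because the inputs are provable and the computation runs onchain, the resulting TWAP is itself part of the coprocessor's state and can be proven to any other chain.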

Oracles?

Unfortunately, oracles do not benefit from crypto-economic security because misbehaviours are unattributable. The reason for this is that there is no onchain computation that produces their outputs. As such, there’s no verifiable way to prove faults even when there are clearly faults. This essentially makes slashing depend on social consensus rather than fair onchain logic. Typically, oracles will say that they attribute fault by looking at deviations from an idealised distribution. But this model is entirely probabilistic by definition. Unlike the deterministic nature of typical coprocessors, the probabilistic model employed by oracles introduces additional uncertainties and risk.

Furthermore, the absence of an onchain computation mechanism that precedes oracle outputs means that there is no verifiable proof of their accuracy or correctness. This lack of verifiability not only raises concerns about the reliability of the data provided by oracles but also makes it challenging to identify and rectify any faults or inaccuracies in a timely manner. In summary, the lack of transparency, accountability, and verifiability prevents oracle protocols from inheriting crypto-economic security.